
OcapPub: Towards networks of consent

- Conceptual overview

- Understanding object capabilities (ocaps)

- How to build it

- Limitations

- Future work

- Conclusions

This paper is released under the Apache License version 2.0; see LICENSE.txt for details.

For a broader overview of various anti-spam techniques, see AP Unwanted Messages, which has in many ways informed this document, though it currently differs in some details of its implementation rollout. (These two documents may converge.)

Conceptual overview

The federated social web is living in its second golden age, after the original success of StatusNet and OStatus in the late 2000s. Much of this success has come from the adoption of a single protocol, ActivityPub, to connect the many different instances and applications into a unified network.

Unfortunately, from a security and social threat perspective, the way ActivityPub is currently rolled out leaves it under-prepared to protect its users. In this paper we introduce OcapPub, which is compatible with the original ActivityPub specification. With only mild to moderate adjustments to the existing network, we can deliver what we call "networks of consent": explicit and intentional connections between different users and entities on the network. The idea of "networks of consent" is then implemented on top of a security paradigm called "object capabilities", which, as we will see, maps neatly onto the actor model on which ActivityPub is based. While we do not claim that all considerations of consent can be modeled in this or any protocol, we believe this approach can encode the maximum amount of consent that such a system is able to represent.

Paradoxically, what may initially appear to be a restriction actually opens up the possibility of richer interactions than were previously possible on the federated social web while better preserving the intentions of users on the network.

ActivityPub

ActivityPub is a federated social network protocol. It can generally be understood fairly easily by reading the Overview section of the standard. In short, just as anyone can host their own email server, anyone can host their own ActivityPub server, and yet different users on different servers can interact. At the time of writing, ActivityPub is seeing major uptake, with several thousand nodes and several million registered users (with the caveat that registered users are not the same as active users). The wider network of ActivityPub-using programs is often called "the fediverse" (though this term predates ActivityPub, and was also used to describe adoption of its predecessor, OStatus).

ActivityPub defines both a client-to-server and server-to-server protocol, but at this time the server-to-server protocol is what is most popular and is the primary concern of this article.

ActivityPub's core design is fairly clean, following the actor model. Different entities on the network can create other actors/objects (such as someone writing a note) and communicate via message passing. A core set of behaviors are defined in the spec for common message types, but the system is extensible so that implementations may define new terms with minimal ambiguity. If two instances both understand the same terms, they may be able to operate using behaviors not defined in the original protocol. This is called an "open world assumption" and is necessary for a protocol as general as ActivityPub; it would be extremely egotistical of the ActivityPub authors to assume that we could predict all future needs of users.1

Unfortunately (mostly due to time constraints and lack of consensus), even though most of what is defined in ActivityPub is fairly clean/simple, ActivityPub needed to be released with "holes in the spec". Certain key aspects critical to a functioning ActivityPub server are not specified:

- Identity verification is not specified. ("Identity verification" is the same as "authentication", but since "authentication" sounds confusingly similar to "authorization", we generally avoid that term in this document.) Identity verification is important to verify "did this entity really say this thing".2 However, the community has mostly converged on using HTTP Signatures to sign requests when delivering posts to other users. The advantage of HTTP Signatures is that they are extremely simple to implement and require no normalization of message structure; simply sign the body (and some headers) as-you-are-sending-it. The disadvantage of HTTP Signatures is that this signature does not "stick" to the original post and so cannot be "carried around" the network. A minority of implementations have implemented some early versions of Linked Data Proofs (formerly known as "Linked Data Signatures"); however, these require a normalization algorithm for which not all developers have a library in their language, so Linked Data Proofs have not yet caught on as widely as HTTP Signatures.

- Authorization is also not specified. At present, authorization tends to be extremely ad-hoc in ActivityPub systems, sometimes as ad-hoc as unspecified heuristics based on tracking who received messages previously, who sent a message the first time, and so on. Sadly, the primary workaround has been that interactions which require richer authorization simply have not been rolled out onto the ActivityPub network.
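To make the point about signatures not "sticking" concrete, here is a minimal Python sketch of the draft-cavage style of HTTP Signatures that much of the fediverse uses. It assumes HMAC-SHA256 with a shared secret purely as a stand-in for the RSA-SHA256 keys real servers use (Python's standard library has no RSA), and the hosts, inbox paths, and date are hypothetical. The signature covers the request target and a Digest of the body exactly as sent, so it vouches for one delivery, not for the post itself.

```python
import base64
import hashlib
import hmac
import json

def digest_header(body: bytes) -> str:
    # SHA-256 digest of the exact bytes on the wire, as carried in the Digest header.
    return "SHA-256=" + base64.b64encode(hashlib.sha256(body).digest()).decode()

def signing_string(method: str, path: str, host: str, date: str, body: bytes) -> str:
    # The string covered by the signature: "(request-target)" plus a few headers.
    return "\n".join([
        f"(request-target): {method.lower()} {path}",
        f"host: {host}",
        f"date: {date}",
        f"digest: {digest_header(body)}",
    ])

def sign(key: bytes, method: str, path: str, host: str, date: str, body: bytes) -> str:
    # Stand-in: HMAC-SHA256 instead of the RSA-SHA256 used by real servers.
    text = signing_string(method, path, host, date, body).encode()
    return base64.b64encode(hmac.new(key, text, hashlib.sha256).digest()).decode()

def verify(key: bytes, signature: str, method: str, path: str, host: str,
           date: str, body: bytes) -> bool:
    return hmac.compare_digest(signature, sign(key, method, path, host, date, body))

# Hypothetical values for illustration only.
KEY = b"a-example-server-secret"
DATE = "Tue, 07 Jun 2022 20:51:35 GMT"
activity = {"type": "Create", "actor": "https://a.example/users/alice",
            "object": {"type": "Note", "content": "Hello, Bob!"}}
body = json.dumps(activity).encode()
sig = sign(KEY, "POST", "/users/bob/inbox", "b.example", DATE, body)

# Bob's server can verify the delivery exactly as it was sent...
ok_as_sent = verify(KEY, sig, "POST", "/users/bob/inbox", "b.example", DATE, body)
# ...but the same signature says nothing once the post travels elsewhere,
# whether byte-for-byte or re-serialized (here: pretty-printed).
ok_other_inbox = verify(KEY, sig, "POST", "/users/carol/inbox", "c.example", DATE, body)
reserialized = json.dumps(activity, indent=2).encode()
ok_reserialized = verify(KEY, sig, "POST", "/users/carol/inbox", "c.example",
                         DATE, reserialized)
```

Linked Data Proofs avoid this by signing a normalized form of the object itself, which is exactly why they need the normalization algorithm mentioned above.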

Compounding this situation is the general confusion/belief that authorization must stem from identity verification (again, partly because "authentication" is often used for "identity verification", and that term sounds in English too similar to "authorization"). This document aims to show that not only is this not true, it is also a dangerous assumption with unintended consequences. An alternative approach based on "object capabilities" is demonstrated, showing that the actor model itself, if we take it in its purest form, is already a sufficient authorization system.

Unfortunately there is a complication.

At the last minute of ActivityPub's standardization, sharedInbox was added as a mutated form of the previously described publicInbox (which was a place for servers to share public content).

The motivation of sharedInbox is admirable: while ActivityPub is based on explicit message sending to actors' inbox endpoints, if an actor on server A needs to send a message to 1000 followers on server B, why should server A make 1000 separate requests when it could do it in one?

A good point, but the primary mistake lies in how this one request is made: rather than sending one message listing all 1000 recipients on that server (which would preserve the actor model's integrity), it was advocated that, since servers are already tracking follower information, the receiving server can decide whom to send the message to. Unfortunately this decision breaks the actor model and also our suggested solution to authorization; see MultiBox for a suggestion on how we can solve this.

This is more serious than it seems; we cannot proceed to make the system much safer to use without throwing out sharedInbox, since with it we lose our ability to make intentional, directed messages.
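The difference between the two batching strategies can be sketched in Python as follows. This is a hypothetical illustration, not the MultiBox proposal: the envelope shape with an explicit `recipients` field and all function names are assumptions. Both strategies make one request per server; what differs is who decides where the message goes.

```python
from collections import defaultdict
from urllib.parse import urlparse

def batch_explicit(activity: dict, recipients: list[str]) -> dict[str, dict]:
    """Group recipients by host: one request per host that names each recipient."""
    by_host: dict[str, list[str]] = defaultdict(list)
    for actor in recipients:
        by_host[urlparse(actor).netloc].append(actor)
    # Hypothetical envelope: the activity plus the explicit list of addressees.
    return {host: {"activity": activity, "recipients": actors}
            for host, actors in by_host.items()}

def deliver_shared_inbox(activity: dict, sender: str,
                         follower_table: dict[str, set[str]]) -> list[str]:
    """sharedInbox style: the *receiving* server decides who gets the message."""
    return sorted(local for local, follows in follower_table.items()
                  if sender in follows)

def deliver_explicit(envelope: dict, local_actors: set[str]) -> list[str]:
    """Explicit style: deliver only to named recipients that actually live here."""
    return sorted(a for a in envelope["recipients"] if a in local_actors)

alice = "https://a.example/users/alice"
note = {"type": "Create", "actor": alice,
        "object": {"type": "Note", "content": "Just for you two"}}
# Alice intends this message for Bob and Carol only.
envelopes = batch_explicit(note, ["https://b.example/users/bob",
                                  "https://b.example/users/carol"])

# b.example knows that Bob, Carol, and Dave all follow Alice.
b_followers = {"https://b.example/users/bob": {alice},
               "https://b.example/users/carol": {alice},
               "https://b.example/users/dave": {alice}}
shared = deliver_shared_inbox(note, alice, b_followers)
explicit = deliver_explicit(envelopes["b.example"], set(b_followers))
```

Here `shared` includes Dave because the receiving server fans the message out to every local follower, while `explicit` reaches only the two actors Alice actually addressed.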

Despite these issues, ActivityPub has achieved major adoption. ActivityPub has the good fortune that its earliest adopters tended to be people who cared about human rights and the needs of marginalized groups, and spam has been relatively minimal.

The mess we're in

Mastodon Is Like Twitter Without Nazis, So Why Are We Not Using It? – Article by Sarah Jeong, which drove much interest in adoption of Mastodon and the surrounding "fediverse"

At the time this article was written about Mastodon (by far the most popular implementation of ActivityPub, and also largely responsible for driving interest in the protocol amongst other projects), its premise was semi-true; while it wasn't true that there were no neo-nazis on the fediverse, the primary groups which had driven recent adoption were themselves marginalized groups who felt betrayed by the larger centralized social networks. They decided it was time for them to make homes for themselves. The article participated in an ongoing narrative that (from the author's perspective) helped reinforce these community norms for the better.

However, there is nothing about Mastodon or the fediverse at large (including the core of ActivityPub) which specifically prevents nazis or other entities conveying undesirable messages (including spam) from entering the network; they just weren't there, or were in small enough numbers that instance administrators could block them. But the fediverse no longer has the luxury of claiming to be neo-nazi free (if it ever could). People from marginalized groups, to whom the fediverse has in recent history appealed, are now at risk of targeted harassment from these groups. Even untargeted messages, such as general hate speech, may have a severe negative impact on one's well-being. Spam, likewise, is an increasing concern for administrators and implementers (as it has historically been for other federated social protocols, such as email/SMTP and OStatus during its heyday). It appears that the same property of decentralized social networks that allows marginalized communities to make homes for themselves also means that harassment, hate speech, and spam cannot be wholly ejected from the system.

Must all good things come to an end?

Unwanted messages, from spam to harassment

One thing that spam and harassment have in common is that they are the delivery of messages that are not desired by their recipient. However, it would be a mistake to claim that the impact of the two is the same: spam is an annoyance, and mostly wastes time; harassment wastes time, but may also cause trauma.

Nonetheless, despite the impact of spam and harassment being very different, the solutions are likely very similar. Unwanted messages tend to come from unwanted social connections. If the problem is users receiving unwanted messages, perhaps the solution comes in making intentional social connections. But how can we get from here to there?

Freedom of speech also means freedom to filter

As an intermediate step, we should throw out a source of confusion: what is "freedom of speech"? Does it mean that we have to listen to hate speech?

We can start by saying that freedom of speech and the freedom of assembly are critical tools. Indeed, these are some of the few tools we have against the totalitarian authorities by which the world is increasingly threatened.

Nonetheless, we are under severe threat from neo-fascists. Neo-fascists play an interesting trick: they exercise their freedom of speech by espousing hate speech and, when people say they don't want to listen to them, say that this is censorship.

Except that freedom of speech merely means that you have the freedom to exercise your speech, somewhere. It does not mean that everyone has to listen to you. You also have the right to call someone an asshole, or stop listening to them. There is no requirement to read every piece of spam that crosses your email inbox to preserve freedom of speech; neither is there to listen to someone who is being an asshole. The freedom to filter is the complement to freedom of speech. This applies to both individuals and to communities.

Indeed, the trick of neo-fascists ends in a particularly dangerous hook: they are not really interested in freedom of speech at all. They are interested in freedom of their speech, up until the point where they can gain enough power to prevent others from saying things they don't like. This is easily demonstrated; see how many people on the internet are willing to threaten women and minorities who exercise the smallest amount of autonomy, yet the moment that someone calls them out on their own bullshit, they cry censorship. Don't confuse an argument for "freeze peach" with an argument for "free speech".

Still, what can we do? Perhaps we cannot prevent assholes from joining the wider social network… but maybe we can develop a system where we don't have to hear them.

Did we borrow the wrong assumptions?

"What if we're making the wrong assumptions about our social networks? What if we're focusing on breadth, when we really should be focusing on depth?" – approximate quote from a conversation with Evan Prodromou, initial designer of both ActivityPub and OStatus' protocol designs

What is Evan trying to say here? Most contemporary social networks are run by surveillance capitalist organizations; in other words, their business model is based on capturing as much "attention" as possible to sell to advertisers. Whether or not capitalism is a problem is left as an exercise for the reader, but hopefully most readers will agree that a business model based on destroying privacy can lead to undesirable outcomes. One such undesirable outcome is that these companies subtly shape the way people interact with each other, based not on what is healthiest for people and their social relationships, but on what will generate the most advertising revenue.

One egregious example of this is the prominence of the "follower count" in contemporary social networks, particularly Twitter. When visiting another user's profile, even someone who is aware of and dislikes its effect will have trouble not comparing follower counts and mentally using this as a value judgement, either about the other person or about themselves. Users are subconsciously tricked into playing a popularity contest, whether they want to play that game or not. Rather than being encouraged to develop a network of meaningful relationships with which they have meaningful communications, users face a subconscious pressure to tailor their messaging and even who else they follow to maximize their follower count.

So why on earth would we see follower counts also appear prominently on the federated social web, if these tools are generally built by teams that do not benefit from the same advertising structure? The answer is simple: it is what developers and users are both familiar with. This is not an accusation; in fact, it is a highly sympathetic position to take: the cost, for developers and users alike, of developing a system is lower by going with the familiar rather than researching the ideal. But the consequences may nonetheless be severe.

So it is too with how we build our notion of security and authorization, which developers tend to mimic from the systems they have already seen. Why wouldn't they? But it may be that these patterns are, in fact, anti-patterns. It may be time for some re-evaluation.

We must not claim we can prevent what we can not

"By leading users and programmers to make decisions under a false sense of security about what others can be prevented from doing, ACLs seduce them into actions that compromise their own security." – From an analysis from Mark S. Miller on whether preventing delegation is even possible

The object capability community has a phrase that is almost, but not entirely, right in my book: "Only prohibit what you can prevent". This seems almost right, except that there may be things that we cannot technically prevent within the bounds of a system, yet which we prohibit anyhow and enforce at another abstraction layer, including social layers. So here is a slightly modified version of that phrase: "We must not claim we can prevent what we can not."

This is important. There may be things which we strongly wish to prevent on a protocol level, but which are literally impossible to do on only that layer. If we misrepresent what we can and cannot prevent, we open our users to harm when those things that we actually knew we could not prevent come to pass.

A common example of something that cannot be prevented is the copying of information. Due to basic mathematical properties of the universe, it is literally impossible to prevent someone from copying information once they have it, on the data transmission layer alone. This does not mean that there are no other layers where we can prohibit such activity, but we shouldn't pretend we can prevent it at the protocol layer.

For example, Alice may converse with her therapist over the protocol of sound wave vibrations (i.e., simple human speech). Alice may be expressing information that is meant to be private, but there is nothing about speech traveling through the air that prevents the therapist from breaking confidence and gossiping about it to outside sources. Alice could take her therapist to court, and her therapist could lose her license, but that enforcement does not happen on the protocol layer of ordinary human speech itself. Similarly, we could add a "please keep this private" flag to ActivityPub messages so that Alice could tell Bob to please not share her secrets. Bob, being a good friend, will probably comply, and maybe his client will help him cooperate by default. But "please" or "request" is really key to our interface, since from a protocol perspective, there is no guarantee that Bob will comply. However, this does not mean there are no consequences for Bob if he betrays Alice's trust: Alice may stop being his friend, or at least unfollow him.

Likewise, it is not possible to attach a protocol-enforceable "do not delegate" flag onto any form of authority, whether it be an ocap or an ACL. If Alice tells Bob that Bob, and Bob alone, has been granted access to this tool, we should realize that as long as Bob wants to cooperate with Mallet and has communication access to him, he can always set up a proxy that can forward requests to Alice's tool as if they were Bob's.

We are not endorsing this, but we are acknowledging it. Still, there is something we can do: we could wrap Bob's access to Alice's tool so that every invocation is logged as a use of the capability Alice handed to Bob, and disable access if it is misused… whether due to Bob's actions, or Mallet's. In this way, even though Alice cannot prevent Bob from delegating authority, Alice can hold Bob accountable for the authority granted to him.
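A minimal Python sketch of this accountability pattern, assuming capabilities are modeled as plain callables: Alice hands Bob a wrapped forwarder to her tool that records every invocation under a label she chose, together with a revoke function that only she keeps. The names `make_accountable`, `alice_tool`, and the label string are hypothetical. Nothing stops Bob from building his own proxy for Mallet, but every call Mallet makes still shows up as a use of Bob's capability.

```python
from typing import Any, Callable

class Revoked(Exception):
    """Raised when a revoked capability is invoked."""

def make_accountable(target: Callable[..., Any], label: str, log: list[str]):
    """Return (capability, revoke): a forwarder that logs each use under `label`."""
    state = {"enabled": True}

    def capability(*args: Any) -> Any:
        if not state["enabled"]:
            raise Revoked(label)
        log.append(f"{label} invoked with {args!r}")
        return target(*args)

    def revoke() -> None:
        state["enabled"] = False

    return capability, revoke

# Alice's (hypothetical) tool and her audit log.
def alice_tool(msg: str) -> str:
    return f"posted: {msg}"

audit: list[str] = []
bob_cap, revoke_bob = make_accountable(alice_tool, "cap-for-bob", audit)

# Alice cannot prevent Bob from proxying for Mallet...
def bobs_proxy_for_mallet(msg: str) -> str:
    return bob_cap(msg)

bobs_proxy_for_mallet("spam from Mallet")

# ...but the misuse is logged against the capability she gave Bob, so she revokes it.
revoke_bob()
try:
    bobs_proxy_for_mallet("more spam")
    still_works = True
except Revoked:
    still_works = False
```

Revoking Bob's forwarder cuts off Mallet too, because Mallet's access only ever existed as a path through Bob's capability.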

If we do not take this approach, we expose our users to harm. Users may believe their privacy is intact and may be unprepared for the point at which it is violated, and so on and so on.

We must not claim we can prevent what we can not. This will be a guiding principle for the rest of this document.

Anti-solutions

In this section we discuss "solutions" that are, at least on their own, an insufficient foundation to solve the pressing problems this paper is trying to resolve. Some of these might be useful complementary tools, but are structurally insufficient to be the foundation of our approach.

Blocklists, allow-lists, and perimeter security

"With tools like access control lists and firewalls, we engage in 'perimeter defense', which is more correctly described as 'eggshell defense'. It is like an eggshell for the following reason: while an eggshell may seem pretty tough when you tap on it, if you can get a single pinhole anywhere in the surface, you can suck out the entire yoke. No wonder cybercrackers laugh at our silly efforts to defend ourselves. We have thrown away most of our chances to defend ourselves before the battle even begins." – Marc Stiegler, E in a Walnut

Blocklists and allow-lists appear, at first glance, to be a good foundation for establishing trust or distrust on a social network. Unfortunately, both solutions as a foundation actually shake the structure of the system apart after long enough.

This isn't to say we aren't sympathetic to the goals of block-lists and allow-lists, but that they don't work long term. In order to understand this, we need to look at the problem from several sides.

The Nation-State'ification of the Fediverse

Part of the major narrative of the federated social network at the moment is that running an instance is an excellent opportunity to host and support a community, maybe of people like you or people you like. Different rules may apply differently on different instances, but that's okay; choose an instance that matches your personal philosophy.

So you run an instance. On your instance, maybe some bad behavior happens from some users. You begin to set up policies. You perhaps even ban a user or two. But what about bad behavior that comes from the outside? This is a federated social network, after all.

Blocking a user is fine. Blocking an instance or two is fine. But what happens when anyone can spawn a user at any time? What happens when anyone can spawn an instance at any time? Self-hosting, which originally seemed like something to aspire to, becomes a threat to administrators; if anyone can easily spawn an instance, host administrators and users are left playing whack-a-mole against malicious accounts. It seems like our model is not set up to be able to handle this.

Soon enough, you are tired of spending all your free time administrating the instance blocklist. You begin to set up the ability to share automatic blocklists between friends. But the governance of these lists seems fraught at best, and prone to in-fighting. Worse yet, you seem to have improperly gotten on several blocklists and you're not sure how. The criteria for what is and isn't acceptable behavior between instances varies widely, and it's unclear to what extent it's worth appealing.

It dawns on you: the easier approach isn't a deny-list, it's an allow-list (aka a whitelist). Why not just trust these five nodes? It's all you have energy for anymore.

Except… what if you aren't one of the five major nodes? Suddenly you see that other nodes are doing the same thing, and people are de-federating from you. It's not worth running a node anymore; if you aren't on one of the top five… hold up… top three instances anymore, nobody gets your messages anyway.

This is the "nation-state'ification of the fediverse", and it results in all the traditional xenophobia of nation-states. Sure, border guards as a model on the fediverse aren't as bad as in the physical world; they can't beat you up, they can't take your things (well, maybe your messages), they can't imprison you. And yet the problem seems similar. And it's only going to get worse until we're back at centralization again.

A fundamental flaw occurred in our design; we over-valued the role that instances should play altogether. While there is nothing wrong with blocking an instance or two, the network effect of having this be the foundation is re-centralization.

Where do communities really live?

Furthermore, it doesn't even reflect human behavior; few people belong to only one community. Alice may be a mathematics professor at work, a fanfiction author in her personal time, and a tabletop game enthusiast with her friends. The behaviors that Alice exhibits and norms of what is considered acceptable may shift radically in each of these communities, even if in all of these communities she is Alice. This isn't duplicitous behavior, this is normal human behavior, and if our systems don't allow for it, they aren't systems that serve our users' needs. But consider also that Alice may have one email account, and yet may use it for all three of these different communities' email mailing lists. Those mailing lists may be all on different servers, and yet Alice is able to be the right version of Alice for each of those communities as she interacts with them. This seems to point at a mistake in assumptions about the federated social web: the instance is not the community level, because users may have many varying communities on different instances, and each of those instances may govern themselves very differently.

Not only a social problem, but a security problem too

So far the problems with "perimeter security" described above have been examples restricted to the social level. As it turns out, perimeter security has another problem when we start thinking about authorization, called the "confused deputy problem". For example, you might run a local process and consider that it is localhost-only. Whew! Now only local processes can use that program. Except now we can see how "perimeter security" is "eggshell security" by how easy it is to trick another local program into accessing resources on our behalf. An excellent example of this is the remote code execution vulnerability in Guile's live-hackable REPL. Except… Guile didn't appear to "do anything wrong"; it restricted its access to localhost, and localhost only. But a browser could be tricked into sending a request containing code that executed commands against the localhost process. Who is to blame? Both the browser and the Guile process appeared to be following their program specifications, and taken individually, neither seemed incorrect. And yet combined, these two programs could open users to serious vulnerability.
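The shape of a confused deputy can be shown in miniature. This Python sketch is the classic compiler-and-billing-file example rather than the Guile bug itself, with a dictionary standing in for a filesystem; every name in it is hypothetical. The ambient-authority deputy writes wherever its caller names, using its own authority; the capability-style deputy can only write to a file object the caller actually holds and hands over.

```python
class Filesystem:
    """Toy filesystem (path -> contents) standing in for ambient authority."""
    def __init__(self) -> None:
        self.files: dict[str, str] = {"/var/billing": "alice owes $0"}

class WritableFile:
    """A capability to write exactly one file, and nothing else."""
    def __init__(self, fs: Filesystem, path: str) -> None:
        self._fs, self._path = fs, path

    def write(self, text: str) -> None:
        self._fs.files[self._path] = text

def compile_with_ambient_authority(fs: Filesystem, source: str, output_path: str) -> None:
    # The deputy writes wherever it is told, using *its own* authority,
    # which happens to include the billing file.
    fs.files[output_path] = f"compiled({source})"

def compile_with_capability(source: str, output: WritableFile) -> None:
    # The deputy can only write to what the caller hands it.
    output.write(f"compiled({source})")

# A malicious caller names the billing file as its "output" and clobbers it.
fs = Filesystem()
compile_with_ambient_authority(fs, "hello.c", "/var/billing")
clobbered = fs.files["/var/billing"]

# With capabilities, the caller can only pass along what it was granted.
fs2 = Filesystem()
mallet_output = WritableFile(fs2, "/home/mallet/a.out")  # Mallet's only file capability
compile_with_capability("hello.c", mallet_output)
intact = fs2.files["/var/billing"]
```

In the second case there is no string Mallet could pass to reach the billing file, because designation and authority travel together in the same reference.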

Perimeter security is eggshell security. And the most common perimeter check of all is an identity check, the same paradigm used by Access Control Lists. It turns out these problems are related.

Access Control Lists

Up until recently, if you drove a car, the car did not determine whether you could drive it based on who you are, as your identity. If you had a key, you could drive it, and it didn't matter who you were.

Nonetheless, since Unix based its idea of authority on "who you are", this assumption has infected all of our other systems. This is no surprise: people tend to copy the models they have been exposed to, and the model that most programmers are exposed to is either Unix or something inspired by Unix.

But Unix uses ACLs (Access Control Lists), and ACLs are fundamentally broken. In no way do Access Control Lists follow the Principle of Least Authority (PoLA), which is necessary for users to be able to sensibly trust their computing systems in this modern age.

To be sure, we need identity verification when it is important to know that a certain entity "said a particular thing", but it is important to understand that this is not the same as knowing whether a particular entity "can do a certain thing".

Mixing up identity verification with authorization is how we get ACLs, and ACLs have serious problems.

For instance, consider that Solitaire (Solitaire!) can steal all your passwords, cryptolocker your hard drive, or send email to your friends and co-workers as if it were you. Why on earth can Solitaire do this? All the authority it needs is to be able to get inputs from your keyboard and mouse when it has focus, draw to its window, and maybe read/write to a single score file. But Solitaire, and every other one of the thousands of programs on your computer, has the full authority to betray you, because it has the full authority to do everything you can… it runs as you.
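The difference between "runs as you" and least authority can be sketched in Python. All names here are hypothetical, and Python itself does not enforce these boundaries (private attributes can be reached by introspection), so the sketch only shows the shape of the authority each version of Solitaire receives.

```python
class UserAuthority:
    """Everything 'you' can do. Under ACLs, every program you run gets all of it."""
    def __init__(self) -> None:
        self.sent_mail: list[str] = []
        self.files = {"~/.passwords": "hunter2", "~/.solitaire-score": "0"}

    def send_email(self, to: str, body: str) -> None:
        self.sent_mail.append(f"{to}: {body}")

class ScoreFile:
    """Capability to read and write one score file, and nothing else."""
    def __init__(self, files: dict[str, str], path: str) -> None:
        self._files, self._path = files, path

    def read(self) -> str:
        return self._files[self._path]

    def write(self, text: str) -> None:
        self._files[self._path] = text

def solitaire_running_as_you(you: UserAuthority) -> None:
    # Runs "as you", so nothing stops a malicious build from doing what you can do.
    stolen = you.files["~/.passwords"]
    you.send_email("boss@example.com", f"I quit. Also: {stolen}")

def solitaire_with_least_authority(score: ScoreFile) -> None:
    # Handed only a score file: there is no reference to mail or passwords to abuse.
    score.write(str(int(score.read()) + 10))

you = UserAuthority()
solitaire_running_as_you(you)

you2 = UserAuthority()
solitaire_with_least_authority(ScoreFile(you2.files, "~/.solitaire-score"))
```

The second version can still misbehave, but only within the one score file it was given.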

And that's not even to mention that ACLs are subject to the same confused deputy problems as discussed in the previous section. In this paper we'll lay out how ocaps can accomplish some amazing things that ACLs could never safely do… because ACLs Don't.

Content-centric filtering

When spam began to become a serious problem for email, Paul Graham wrote a famous essay called A Plan for Spam. The general idea was to use content filtering, specifically Bayesian algorithms, to detect spam. At the time of this article's release, this worked surprisingly well, with the delightful property that spammers' own messages would themselves train the systems.

Fast forward many years and the same fundamental idea of content filtering has become much more advanced, but so have the attacks against it. Neural networks can catch patterns, but they can also increasingly generate hard-to-detect forms of those same patterns, even generating semi-plausible stories based on short prompts. While most spam sent today uses what we might call "amateur" methods, the potential for sophisticated attacks keeps growing.

To add to this problem, false positives from these systems can be disastrous: YouTube has marked non-sexual LGBT+ videos as "sensitive", and many machine learning systems have been found to pick up racist assumptions from their surrounding environment (and other forms of "ambient bigotry" from the source society's power dynamics as well, of course).

This isn't to say that content filtering can't be a useful complement; if a user doesn't want to look at content with certain words, they should absolutely be free to filter on them. But content filtering shouldn't be the foundation of our systems.

Reputation scoring

Reputation scoring, at the very least, leads us back to the problems of high-school-like pandering for popularity. At the very worst, it results in a credit system that is disproportionately racist.

The effort to categorize people based on their reputation is of increased interest to both large companies and large governments around the world. An ongoing initiative in China is named the Social Credit System. The effect of a reputation hit can be wide-spread and coercive. We need to do better.

Going back to centralization

After reading all this, one might be tempted to feel like the situation is hopeless. Perhaps we ought to just put power back in the hands of central authorities and hope for the best. We will leave it to our readers to look back at the problematic power structures that probably led them to examine distributed social networks in the first place to see why this won't work. But at the very least, companies don't have a good history of standing up for human rights; if the choice is between doing business in a country or not violating its citizens' rights, most companies will seek to maximize value for their shareholders.

A way forward: networks of consent

Don't give up hope! There is a way out of this mess, after all. It lies with the particular use of a security paradigm called "object capabilities" (or "ocaps"). We will get into the technicality of how to implement ocaps in How to build it but for now, let's think about our high-level goals.

The foundation of our system will rely on establishing trust between two parties. If Alice trusts Carol to be able to perform an action, she might "give consent" to Carol. However, giving consent to Carol is not necessarily permanent; Alice has the tools to track abuse of her resources, and if she sees that Carol is irresponsible, she can revoke her consent to Carol. (While Carol could have handed this authority to someone else, Alice would still see the abuse coming from the access she handed Carol, and could still hold Carol responsible.)

What about users that do not yet trust each other? If Alice does not yet know or trust Bob, it is up to Alice's default settings as to whether or not Bob has any opportunity to message Alice. Maybe Alice only gets messages from entities she has existing relationships with.

However, it is possible that Alice could have a "default profile" that anyone can see, but which bears a cost to send a message through. Perhaps Bob can try to send a message, but it ends up in a moderator queue. Or, perhaps Bob can send Alice a message, but he must attach "two postage stamps" to the message. In this case, if the message was nice, Alice might refund Bob one or both stamps. She might even decide to hand him the authority to send messages to her in the future, for free.

But say Bob is a spammer and is sending a Viagra ad; Alice can keep the stamps. Now Bob has to "pay" Alice to be spammed (and depending on how we decide to implement it, Alice might be able to keep this payment). There is always a cost to unwanted messages, but in our current systems the costs lie on the side of the receiver, not the sender. We can shift that dynamic for unestablished relationships. And critically, it is up to Alice to decide her threshold: if she is receiving abusive messages, she can up the number of stamps required, disable her public inbox entirely, or hand over moderation to a trusted party during a particularly difficult period. But even if she disables her inbox, the parties which have existing trust relationships with Alice can still message her at no cost.
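One way Alice's policy could look, as a hypothetical Python sketch: this document deliberately leaves the mechanism open, so the `Inbox` class, the stamp counts, and the all-or-nothing refund rule are assumptions. Trusted contacts deliver for free, strangers must attach at least Alice's current stamp threshold, stamps are held until Alice judges the message, and she can raise the threshold or close the public inbox without affecting established relationships.

```python
from dataclasses import dataclass, field

@dataclass
class Inbox:
    """Hypothetical per-user inbox policy for messages from unestablished senders."""
    stamps_required: int = 2
    public_open: bool = True
    trusted: set[str] = field(default_factory=set)
    delivered: list[str] = field(default_factory=list)
    held_stamps: dict[str, int] = field(default_factory=dict)

    def receive(self, sender: str, text: str, stamps: int = 0) -> bool:
        if sender in self.trusted:
            self.delivered.append(text)  # existing relationship: no cost
            return True
        if not self.public_open or stamps < self.stamps_required:
            return False  # rejected: public inbox closed or not enough postage
        self.held_stamps[sender] = self.held_stamps.get(sender, 0) + stamps
        self.delivered.append(text)
        return True

    def judge(self, sender: str, welcome: bool) -> int:
        """Return stamps refunded to `sender`; a welcome sender becomes trusted."""
        stamps = self.held_stamps.pop(sender, 0)
        if welcome:
            self.trusted.add(sender)
            return stamps
        return 0  # Alice keeps the postage from unwanted messages

alice = Inbox(trusted={"carol"})
r_bob = alice.receive("bob", "Hi, loved your talk!", stamps=2)
refund_bob = alice.judge("bob", welcome=True)         # Bob is now trusted
r_spam = alice.receive("spammer", "Cheap pills", stamps=2)
refund_spam = alice.judge("spammer", welcome=False)   # Alice keeps the stamps

alice.stamps_required = 5                             # a rough week: raise the price
r_cheap = alice.receive("stranger", "hey", stamps=2)
alice.public_open = False                             # or close the public inbox
r_closed = alice.receive("stranger", "hey", stamps=5)
r_carol = alice.receive("carol", "Thinking of you")   # trusted contacts get through
r_bob_free = alice.receive("bob", "Thanks for the refund!")
```

Refunding only some of the stamps, or routing strangers to a moderator queue instead of rejecting them, would be small variations on the same structure.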

We do not claim that this system can fully model consent. It can, however, represent the maximum amount of consent that a system is able to encode. Hopefully that is far enough; it would certainly be better than what we have now.

This document does not specify particular mechanisms but opens up several opportunities. It is up to the community to decide which routes are considered acceptable. But the framework for all of this is object capabilities.

Must we boil the ocean?

All this sounds well and good, but we face a problem. ActivityPub implementations already exist in the wild, and those implementations filled in the holes in the spec in the best ways they knew how.

We do not want to have to throw away the network we have. As such, this document does not try to solve all possible problems. For example, a Webfinger-centric interface is largely incompatible with Tor onion services, even though supporting such services would be desirable and could be more easily accomplished with a petname system. (Imagine trying to complete the Webfinger address of a user who is using a v3 Tor onion service address!) This document therefore simply assumes that we will live with Webfinger-based addresses for now, even if they are not optimal in the long term.
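To make the onion-address problem concrete, here is a small sketch. It builds a standard WebFinger (RFC 7033) lookup URL and compares it with an `acct:` address on a v3 onion service, whose hostname is 56 base32 characters followed by `.onion`. The function name `webfingerUrl`, the host `example.social`, and the onion hostname are made-up placeholders. The onion host has the right shape but is not a real service.

```javascript
// Builds the WebFinger (RFC 7033) lookup URL for an acct: address.
function webfingerUrl(acct) {
  const host = acct.slice(acct.lastIndexOf("@") + 1);
  const query = new URLSearchParams({ resource: `acct:${acct}` });
  return `https://${host}/.well-known/webfinger?${query}`;
}

// A v3 onion hostname is 56 base32 characters followed by ".onion".
// This placeholder has the right shape but is not a real service.
const onionHost = "abcdefghijklmnopqrstuvwxyz234567".repeat(2).slice(0, 56) + ".onion";
const onionAcct = `alice@${onionHost}`;

console.log(webfingerUrl("alice@example.social"));
// https://example.social/.well-known/webfinger?resource=acct%3Aalice%40example.social
console.log(onionAcct.length); // 68 characters to type or complete correctly
```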

Incremental improvement is better than no improvement at all, and there is a lot we can make better.

Understanding object capabilities (ocaps)

The foundation of our system will be object capabilities (ocaps). In order to "give" access to someone, we actually hand them a capability. Ocaps are authority-by-possession: holding onto them is what gives you the power to invoke (use) them. If you don't have a reference to an ocap, you can't invoke it.

Before we learn how to build a network of consent, we should learn to think about how an ocap system works. There are many ways to build ocaps, but before we show the (very simple) way we'll be building ours, let's make sure we wrap our heads around the paradigm a bit better.

Extending the car key metaphor

We equated this to car keys before; the car doesn't care who you are, it only cares that you turn it on using the car key. (Note: though capabilities can also be built on top of cryptographic keys, we're strictly talking about car keys for the moment.)
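In code, the closest analogue to a car key is a plain object reference. The following is a minimal sketch using closures, with hypothetical names (`makeCar`, `key`, `dashboard`). The car's state can be reached only through the objects `makeCar` returns, so holding `key` is the whole of the authority to start the car, and nobody checks who is holding it. One caveat: JavaScript as commonly run is not an ocap-safe language, because globals and imports grant ambient authority. This sketch relies only on closure encapsulation.

```javascript
// Authority by possession, sketched with closures. `makeCar`, `key`, and
// `dashboard` are hypothetical names. The car's state is reachable only
// through the objects returned here.
function makeCar() {
  let running = false;
  // The key is just a reference: the car never asks who is holding it.
  const key = {
    start() { running = true; return "vroom"; },
    stop() { running = false; },
  };
  const dashboard = { isRunning: () => running };
  return { key, dashboard };
}

const { key, dashboard } = makeCar();
console.log(key.start());            // vroom (works for whoever holds `key`)
console.log(dashboard.isRunning());  // true
```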

We can extend the car-key metaphor further and discover some interesting things we could do:

- delegation: We could hand the car key to a friend. We could even make a copy for a trusted friend or loved one.

- attenuation: We could hand out a car key that is more limited than the more powerful one we have. For example, if we go somewhere with full-service-parking, we can construct and hand the valet a "valet key" that only permits driving five miles and won't open the glove box or trunk. Sorry kid, you're not getting a joy ride this time.

- revocation: Let's say that Alice wants to allow her roommate Bob to drive her car, but she also wants to be able to take away that right if Bob misuses it or if they stop being roommates. Alice can make a new car key that has a wire inside of it, and keep a device with a button that makes the key "self-destruct" (i.e., the wire melts). Now Alice can stop Bob from driving the car whenever she wants to (and if she does, it also disables access for anyone else Bob has delegated the key to).

- accountability: Alice has multiple roommates. She would like to allow them all to drive her car, but the next time someone spills a drink in the car and doesn't clean it up, she wants to know who to blame by seeing who drove the car last. Alice can achieve this via composition. First, she installs a separate "logging" panel in the dashboard of her car and keeps for herself the capability to view its logs. Next, for each key she hands a roommate, she composes access to drive the car with the logging service and associates the key with that roommate's name. Now each time one of her roommates uses one of these keys, the logging console (which only Alice has access to) takes note of the associated name, so Alice can check who last left a mess.

You may have noticed that as we went further in the examples, the ability to construct such rich capabilities on the fly seemed less aligned with the physical car key metaphor (not that it wouldn't be possible, but certainly not with such ease, and certainly not with any cars that exist today). And yet ocap systems easily do give us this power. We will take advantage of this power to construct the systems we want and need.
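As a rough illustration of how little machinery these patterns need, here is a sketch of all four in plain JavaScript, using only object references and closures. Every name (`makeFullKey`, `makeValetKey`, `makeRevocable`, `makeLoggingKeyMaker`) is hypothetical. The revocation wrapper follows the well-known "caretaker" forwarder pattern from the ocap literature. To keep the sketch short, the caretaker here forwards only `drive`, so it also attenuates. A general caretaker would forward every method.

```javascript
// The four car-key patterns as plain functions over object references.
// Every name here is hypothetical; the revocation pattern is the classic
// "caretaker" forwarder from the ocap literature.

// The full-power key Alice starts with.
function makeFullKey() {
  let odometer = 0;
  return {
    drive(miles) { odometer += miles; return odometer; },
    openTrunk() { return "trunk open"; },
    openGloveBox() { return "glove box open"; },
  };
}

// Attenuation: a valet key that drives at most `limit` miles in total
// and exposes no trunk or glove box at all.
function makeValetKey(key, limit) {
  let used = 0;
  return {
    drive(miles) {
      if (used + miles > limit) throw new Error("valet key: mileage limit reached");
      used += miles;
      return key.drive(miles);
    },
  };
}

// Revocation: hand out a forwarder, keep the "self-destruct" button.
function makeRevocable(key) {
  let target = key;
  const forwarder = {
    drive(miles) {
      if (target === null) throw new Error("key revoked");
      return target.drive(miles);
    },
  };
  const revoke = () => { target = null; };
  return { forwarder, revoke };
}

// Accountability: compose driving with a log that only Alice can read.
function makeLoggingKeyMaker(key) {
  const log = [];
  const keyFor = (name) => ({
    drive(miles) {
      log.push(name);
      return key.drive(miles);
    },
  });
  const readLog = () => log.slice();
  return { keyFor, readLog };
}

const carKey = makeFullKey();
const valet = makeValetKey(carKey, 5);
valet.drive(3);
console.log(typeof valet.openTrunk);    // undefined: the valet can't open it

const { forwarder: bobsKey, revoke } = makeRevocable(carKey);
const bobsFriendsKey = bobsKey;         // delegation is just passing a reference
revoke();                               // Bob and his friend both lose access
try { bobsFriendsKey.drive(1); } catch (e) { console.log(e.message); } // key revoked

const { keyFor, readLog } = makeLoggingKeyMaker(carKey);
keyFor("Bob").drive(2);
keyFor("Carol").drive(4);
console.log(readLog().join(", "));      // Bob, Carol
```

Delegation needs no code of its own: handing someone a reference is all it takes. Because Bob's friend holds the same forwarder Bob does, a single `revoke()` cuts off both of them, as in the wire-melting key.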

Ocaps meet normal code flow

The nice thing about object capabilities is that they permit the "Principle of Least Authority": we can hand only as much authority as is needed to complete a task.

We saw this earlier with solitaire. If we think of solitaire as a procedure, running it on a contemporary operating system would look like so:

```javascript
// Runs with the full "ambient authority" of the user.
// We don't need to pass in permissions, because it can already
// do everything... but that includes crypto-lockering our
// hard drive, uploading our cryptographic keys and passwords,
// and deleting our data.
solitaire();
```

By contrast, the ocap route would look like so:

```javascript
// We explicitly pass in the ability to read input from the
// keyboard while the window has focus, to draw to the window,
// and to read/write from a specific file.
solitaire(getInput, writeToScreen, scoreFileAccess);
```

In fact, Jonathan Rees showed that ocaps are just everyday programming, and with a few adjustments programming languages can be turned into ocap systems.3 (For example, instead of modules reaching out and grabbing whatever they want to import, we can explicitly pass in access just as we pass in arguments to a function.)
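To make the ocap-style call concrete, here is a runnable sketch in which the three arguments are the only authority the game ever receives. `makeSingleFileAccess` and the in-memory `Map` standing in for a disk are hypothetical stand-ins, not a real operating-system API. The file accessor is an attenuated capability: it is carved out of a whole "disk" but can touch only one path.

```javascript
// Hypothetical sketch: `solitaire`, `makeSingleFileAccess`, and the in-memory
// "disk" are stand-ins for illustration, not a real operating-system API.
function solitaire(getInput, writeToScreen, scoreFileAccess) {
  // The game can only use what it was handed: one input source, one screen,
  // and one score file. Nothing here reaches the rest of the disk.
  const move = getInput();
  writeToScreen(`you played: ${move}`);
  const score = Number(scoreFileAccess.read() || 0);
  scoreFileAccess.write(String(score + 1));
}

// Attenuation: from a whole "disk" we carve out access to a single file.
function makeSingleFileAccess(disk, path) {
  return {
    read: () => disk.get(path),
    write: (data) => { disk.set(path, data); },
  };
}

const fakeDisk = new Map([
  ["~/.solitaire-scores", "41"],
  ["~/.ssh/id_ed25519", "secret key material"],
]);
const drawn = [];
solitaire(
  () => "7 of hearts onto 8 of spades",
  (text) => drawn.push(text),
  makeSingleFileAccess(fakeDisk, "~/.solitaire-scores"),
);
console.log(drawn[0]);                            // you played: 7 of hearts onto 8 of spades
console.log(fakeDisk.get("~/.solitaire-scores")); // 42
```

The SSH key sits on the same "disk", but the game has no reference that leads to it.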

This may seem overwhelming. How, then, do ocaps get to all the right places? Thinking about ocaps as normal program flow makes this clearer. Ask how values that start all the way on one side of a program get all the way to the other side. It turns out that most data passed around in programs doesn't come from ambient user environments or global variables; it comes from ordinary argument passing. This should increase our confidence that we can use ocaps without them becoming a burdensome part of our programming workflows. They are, in fact, very similar to how we program every day.

Ocaps meet social relationships (or: just in time authority)

Some systems that try to confine authority today are called "sandboxes". If you have had experience with present-day sandboxes, you might be skeptical, based on those experiences, that an ocap type system will work. That would be understandable; in many such systems a user has to pre-configure all the authority that a sandboxed process will need before the process even starts up. Almost inevitably, this authority doesn't end up being enough. Time and time again, the user opens the sandboxed process only to find that they have to "poke another hole" in the system. Eventually they let too much authority through; out of frustration, the user might simply pass through nearly everything.

Thankfully, ocaps don't have this problem. Unlike in many traditional sandbox systems, we can pass around references whenever we need them, so authority can be handed over "just in time".

This is less surprising if we consider the way passing around ocap references resembles the way people develop social relationships. If Alice knows Bob and Alice knows Carol, Alice might decide it is useful to introduce Bob to Carol. We see this all the time with the way people exchange phone numbers today. "Oh, you really ought to meet Carol! Hold on, let me give you her number!"

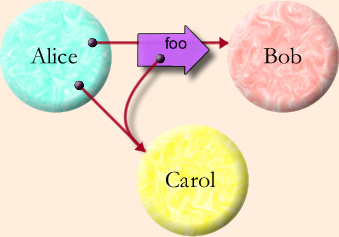

One of the Granovetter Diagrams shown in Ode to the Granovetter Diagram. Pardon the GeoCities-era aesthetic.

In fact, thinking about such social relationships has long been at the heart of ocap systems. One of the most famous (and informative) ocap papers is Ode to the Granovetter Diagram, a truly remarkable paper that shows how many complicated systems, including basic money and financial transaction infrastructure, can be modeled on ocaps. That paper introduces "Granovetter Diagrams" such as the one above, showing how ocaps flow through a system by social introductions.

The above diagram is pretty much exactly the same as our phone number exchange… "Alice is sending Bob the message foo, which contains a reference to Carol, and now Bob has been introduced to / has access to Carol."
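A Granovetter introduction translates almost directly into code. In this hypothetical sketch (`makePerson`, `receive`, `send`, and the `{ type, ref }` message shape are all invented for illustration), a person can message only someone they hold a reference to. Receiving a message that carries a reference is what grants that access. Contacts are keyed by name purely for readability; the authority is the object reference itself.

```javascript
// Sketch of a Granovetter introduction. All names and the message shape
// are hypothetical; the authority is the object reference, not the name.
function makePerson(name, initialContacts = []) {
  const contacts = new Map(initialContacts.map((p) => [p.name, p]));
  return {
    name,
    // A message carrying a reference adds that reference to our contacts.
    receive(message) {
      if (message.ref) contacts.set(message.ref.name, message.ref);
      return `${name} received ${message.type}`;
    },
    // We can only message someone we hold a reference to.
    send(toName, message) {
      const target = contacts.get(toName);
      if (!target) throw new Error(`${name} has no reference to ${toName}`);
      return target.receive(message);
    },
  };
}

const carol = makePerson("Carol");
const bob = makePerson("Bob");
const alice = makePerson("Alice", [bob, carol]); // Alice knows both

try { bob.send("Carol", { type: "hello" }); } catch (e) { console.log(e.message); }
// Bob has no reference to Carol
console.log(alice.send("Bob", { type: "foo", ref: carol })); // Bob received foo
console.log(bob.send("Carol", { type: "hello" }));           // Carol received hello
```

Note that the introduction is one-way. Carol still cannot message Bob unless someone sends her a reference to him.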

It turns out that Granovetter Diagrams have their origin in sociology, in a famous paper by Mark S. Granovetter named The Strength of Weak Ties. It's good news that much of the thinking about ocaps has been based on how human relationships develop, since we are now about to use ocaps to build a robust social network.

How to build it

Ocaps we can use in our protocols

Ocaps as capability URLs

That's all good and well, but real-world metaphors

Ocaps as bearcaps

Ocaps meet ActivityPub objects/actors

The power of proxying

True names, public profiles, private profiles

Rights amplification and group-style permissions

MultiBox vs sharedInbox

Limitations

Future work

Petnames

Conclusions

The technology that ActivityPub uses to accomplish this is called JSON-LD, and it has admittedly been one of the most controversial decisions in the ActivityPub specification. Most of the objections have concerned the unavailability of JSON-LD libraries in some languages, or the difficulty of mapping an open-world assumption onto strongly typed systems without an "other data" bucket. Since a project like ActivityPub must allow for the possibility of extensions, we cannot escape open-world assumptions. There may nonetheless be ways to make people happier with whichever extension mechanism is used, but those discussions are out of scope for this document.

Or more accurately, since users may appoint someone else to manage posting for them, "was this post really made by someone who is authorized to speak on behalf of this entity".

You may have been wondering: does the word "object" in "object capabilities" mean that our programs have to be particularly "object oriented"? First of all, no. Ocaps can be implemented in what may today be perceived as very non-OO functional systems. Second, "object oriented" is a very vague term. Third, "object capabilities" used to be called simply "capabilities". But other computing systems started to implement things they called "capabilities" that have nothing to do with the original definition (e.g., Linux kernel "capabilities" are closer to ACLs than to ocaps). The name "object capability" was chosen to distinguish between the two.